Hello dearest people, I actually wanted to send around a paper and an article about seam carving (image resizing without losing important parts)… but apparently we already had that in 2021 (I forgot – if you did as well, check it out here)

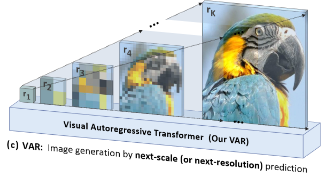

So I decided to try to understand how GPT-4o's image mode works, and found this week's paper. It shows how you can use a transformer model (the kind that powers large language models) to generate an image at ever-increasing resolutions… and apparently that gives better prompt following than diffusion models (the ones behind Stable Diffusion or Flux) have. The paper is okay-ish to read, but as always I added an additional explainer to the links section.
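To make the "ever-increasing resolution" idea a bit more tangible, here is a minimal toy sketch in plain Python. It is not the paper's implementation: `toy_predict_scale` is a hypothetical stand-in for the transformer (it just samples noise), and the scale schedule `(1, 2, 4, 8)` is an assumption picked for illustration. The only point is the loop shape: each autoregressive step emits a whole map at the next resolution, instead of one token at a time in raster order.

```python
import random


def upsample_nearest(grid, size):
    """Nearest-neighbour upsample of a square grid to size x size."""
    n = len(grid)
    return [[grid[r * n // size][c * n // size] for c in range(size)]
            for r in range(size)]


def toy_predict_scale(context, side, rng):
    """Hypothetical stand-in for the transformer.

    A real model would condition on `context` (everything generated at the
    coarser scales). This toy ignores it and samples noise that gets smaller
    at finer scales, so coarse steps set the layout and fine steps add detail.
    """
    return [[rng.gauss(0.0, 1.0 / side) for _ in range(side)]
            for _ in range(side)]


def generate(scales=(1, 2, 4, 8), seed=0):
    """Coarse-to-fine generation: one step per scale, not one per pixel."""
    rng = random.Random(seed)
    final = scales[-1]
    canvas = [[0.0] * final for _ in range(final)]
    steps = 0
    for side in scales:
        # One autoregressive step predicts the whole side x side map at once.
        new_map = toy_predict_scale(canvas, side, rng)
        up = upsample_nearest(new_map, final)
        # Add the upsampled map onto the running full-resolution canvas.
        canvas = [[canvas[r][c] + up[r][c] for c in range(final)]
                  for r in range(final)]
        steps += 1
    return canvas, steps


canvas, steps = generate()
print(f"{steps} next-scale steps vs {len(canvas) ** 2} raster-scan steps")
```

For an 8×8 toy canvas that is 4 steps instead of 64, which gives a rough intuition for why predicting scale by scale can be faster at inference time than predicting token by token.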

Abstract:

We present Visual AutoRegressive modeling (VAR), a new generation paradigm that redefines the autoregressive learning on images as coarse-to-fine “next-scale prediction” or “next-resolution prediction”, diverging from the standard raster-scan “next-token prediction”. This simple, intuitive methodology allows autoregressive (AR) transformers to learn visual distributions fast and can generalize well: VAR, for the first time, makes GPT-style AR models surpass diffusion transformers in image generation. On ImageNet 256×256 benchmark, VAR significantly improve AR baseline by improving Fréchet inception distance (FID) from 18.65 to 1.73, inception score (IS) from 80.4 to 350.2, with 20× faster inference speed. It is also empirically verified that VAR outperforms the Diffusion Transformer (DiT) in multiple dimensions including image quality, inference speed, data efficiency, and scalability. Scaling up VAR models exhibits clear power-law scaling laws similar to those observed in LLMs, with linear correlation coefficients near −0.998 as solid evidence. VAR further showcases zero-shot generalization ability in downstream tasks including image in-painting, out-painting, and editing. These results suggest VAR has initially emulated the two important properties of LLMs: Scaling Laws and zero-shot generalization. We have released all models and codes to promote the exploration of AR/VAR models for visual generation and unified learning.
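If the "linear correlation coefficients near −0.998" part of the abstract sounds cryptic: a power law becomes a straight line once you take logs on both axes, so you can measure how well it holds with an ordinary Pearson correlation on the log values. Here is a minimal sketch of that check. The data points are synthetic and made up for illustration (they are not the paper's numbers), and the function names are my own.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def fit_power_law(sizes, losses):
    """Fit L = a * N**slope via least squares on (log N, log L)."""
    lx = [math.log(n) for n in sizes]
    ly = [math.log(v) for v in losses]
    mx, my = sum(lx) / len(lx), sum(ly) / len(ly)
    slope = (sum((x - mx) * (y - my) for x, y in zip(lx, ly))
             / sum((x - mx) ** 2 for x in lx))
    a = math.exp(my - slope * mx)
    return a, slope, pearson(lx, ly)


# Synthetic points generated from an exact power law (NOT the paper's data).
sizes = [1e7, 1e8, 1e9, 1e10]
losses = [2.0 * n ** -0.1 for n in sizes]

a, slope, r = fit_power_law(sizes, losses)
print(f"a={a:.3f}, slope={slope:.3f}, correlation={r:.3f}")
```

Because the synthetic points lie exactly on a power law, the correlation comes out at essentially −1. Real measurements are noisy, so a value like −0.998 means the points sit very close to a straight line in log-log space.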

Download Link:

https://arxiv.org/pdf/2404.02905

Additional Links:

- YouTube Video Showcasing and Explaining the Paper